- 03 April 2023 (4 messages)

-

Is there a way to get an updated JSON of all issuances (with descriptions) from block 779652?

-

Probly easiest to query direct from node
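A rough sketch of what "query direct from node" could look like against the counterparty-lib JSON-RPC API. The `get_issuances` method name and the `filters` / `limit` / `offset` parameters follow the public API docs as I understand them; `post` is a hypothetical callable you supply that sends the body to your node and returns the `result` list, so treat this as an outline rather than a tested client.

```python
def issuances_request(start_block, offset=0, limit=1000):
    """Build a JSON-RPC 2.0 body asking for issuances at or after start_block."""
    return {
        "jsonrpc": "2.0",
        "id": 0,
        "method": "get_issuances",
        "params": {
            # Assumed filter shape: field / op / value, as in the API docs.
            "filters": [{"field": "block_index", "op": ">=", "value": start_block}],
            "limit": limit,
            "offset": offset,
        },
    }


def fetch_all_issuances(post, start_block, limit=1000):
    """Page through results until a short page signals the end.

    `post` is a hypothetical helper: it takes the request body and
    returns the list of rows from the node's response.
    """
    rows, offset = [], 0
    while True:
        page = post(issuances_request(start_block, offset, limit))
        rows.extend(page)
        if len(page) < limit:
            return rows
        offset += limit
```

Each returned row should carry the issuance description, so dumping `rows` with `json.dump` would give the JSON file asked about.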

-

My node is way behind

-

😭

- 05 April 2023 (5 messages)

-

Joined.

-

Hi.

I have a question about the bare multisig used by the stamp protocol.

Can the creator spend (and potentially destroy the digital artifact) the bare multisig output?

-

Yeah you can spend the outputs

-

-

You could just store more arbitrary data in the 3rd public key

- 06 April 2023 (44 messages)

-

Joined.

-

can I spam my noob question here?

here's the github issue link: https://github.com/CounterpartyXCP/counterparty-lib/issues/1230

unable to list wallet balance with counterparty-cli · Issue #1230 · CounterpartyXCP/counterparty-lib

what exactly are wallet-user and wallet-password in counterparty-cli client.conf? do I need them if I'm using a regular bitcoin-core wallet? should I be creating the wallet in some other way? w...

-

hi, where can I ask some technical issues I've been having with counterparty-lib and client? I opened an issue on github but someone with experience can probably help out pretty quickly

-

God bless Stamps. Welcome, ser.

-

Joined.

-

That's great, I had been thinking of something along these lines, if not for the content then for the metadata.

Have you worked out what the storage requirements are?

Will you publish some details on how to do this once you're done?

If I understand IPFS correctly, more people running it would increase download speeds.

-

https://jpja.github.io/Electrum-Counterparty/index.html?address=1PSHqxC67RVdedDgK8YCogtwNnBdrLWBHU

Here's a web interface for generating op_return data to be sent from Electrum.

Balances are from xchain api, otherwise no reliance on APIs.

Also a sanity checker for the encoded message. Can be a good safety addition for wallets that do use APIs to build the tx msg.

This is the first alpha release. Expect bugs. Only enhanced send implemented so far.

-

Awesome! Will check it out… the sanity checker would be great to integrate into cp when signing raw txs…. Don’t wanna accidentally blindly sign a sweep instead of a send 👍🏻 great work as usual JP❤️

-

I've something like this to get balances. It normally works fine but I've a feeling that, with stamps, there are WAY too many assets in that "asset IN" clause.

What is a better way to fetch those balances that have stamps?

-

more data is gonna require more requests... keep the same basic query code... just break your "assetNames" list up into chunks of 1000 asset names..... then just loop through making queries to get all the asset balances 1000 at a time until you have all balances..

-

I think the mysql "IN" param limit is like 999 ... so maybe break up into chunks of 500 assets per query
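The chunking idea above can be sketched like this. `query` is a hypothetical stand-in for whatever function already runs the existing "asset IN (...)" balance query for one address and a list of asset names; only the batching loop is the point here, and 500 is just the batch size suggested in the chat.

```python
def chunked(items, size=500):
    """Yield consecutive slices of `items`, each at most `size` long."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def fetch_balances(query, address, asset_names, size=500):
    """Run the existing balance query once per batch and merge the rows.

    `query(address, batch)` is assumed to return a list of balance rows
    for the asset names in `batch`.
    """
    balances = []
    for batch in chunked(asset_names, size):
        balances.extend(query(address, batch))
    return balances
```

With 1,234 asset names and the default size, this makes three queries (500, 500, 234) instead of one oversized one.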

-

-

-

-

-

still need to find time to play with ChatGPT and copilot.... feel it could write a ton of code for me and simplify my life... if I could only find more hours in the day I could finally have time to play with it 😛

-

Just install Copilot. Takes 10 minutes or so. You'll get those ten minutes back within a week, imho.

-

-

sublime text

-

Kicking it old school. That's awesome.

-

-

yup... just use it for code-completion and syntax highlighting... a bit easier than vim 😛

-

Ahah. I have the same editor

-

-

someone’s beating up xchain right now? @jdogresorg

-

yup... that and I need to add 2 new servers to the cluster

-

-

-

how hard would it be for me to stand up a mirror @jdogresorg and are there others?

-

private codebase... so only standing up servers myself

-

I feel that pain

-

but if ppl want to pay for servers, that works for me... typically in the past someone has paid for a server on ovh.com to the specs I say... then they just cover the monthly hosting and I login to the server and set it all up.... so that we can "trust" that the person running the server is not running bad code, etc.

-

last year we had 5 API servers... down to just 1 now.

-

but the API servers dont really help with xchain redundancy.. just counterwallet

-

blah blah blah 😛

-

will bring it back up soon... the 2 new servers are 16 core / 64 GB ram, 1TB NVME SSD... so curious to see how they perform vs the other servers

-

-

I will stand up a server

-

hit my dms

-

Any comments about juans explorer angle?

-

Cloudflare host or just cdn?

-

it is open source... if you want to use it, feel free... I think most ppl want something more polished.... I am 1/2 way through writing tokenscan.io... but, haven't touched the code in like 2 years cuz busy with other work

-

already use cloudflare and 9 servers... most with 12-16 cores, 64 GB RAM, 5TB SATA

-

- 07 April 2023 (55 messages)

-

-

-

-

-

-

Joined.

-

dumb question. How can I get a more detailed timestamp for a tx so I know which was first in the same mined block?

-

If it’s for counterparty Tx you can just look at the index

-

The time stamp would be the same for every Tx in the same block but counterparty parses them in order from start to end so the index will indicate which came first
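A small illustration of ordering by index rather than timestamp. The records below are made-up placeholders; the `block_index` and `tx_index` field names match what Counterparty transaction rows normally expose, but check them against your own data source.

```python
# Three hypothetical transactions; two share a block (and thus a timestamp).
txs = [
    {"tx_hash": "bbb", "block_index": 784000, "tx_index": 2301},
    {"tx_hash": "aaa", "block_index": 784000, "tx_index": 2299},
    {"tx_hash": "ccc", "block_index": 783999, "tx_index": 2290},
]

# Sort by block first, then by the order Counterparty parsed them.
ordered = sorted(txs, key=lambda t: (t["block_index"], t["tx_index"]))
first_in_block_784000 = next(t for t in ordered if t["block_index"] == 784000)
```

Here `first_in_block_784000` is the "aaa" record, because its `tx_index` is lower even though both transactions carry the same block time.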

-

thks

-

Has the ability to import an address by private key been dropped from counterwallet 1.9? can 1.8 still be run locally?

-

Import funds only seems to offer sweep from private key now

-

FYI.. @robotlovecoffee Just funded a Counterparty API / Counterwallet server for a year ($1500)... I'll be setting it up shortly and will be updating counterwallet.io to add it, and add it to the api.counterparty.io loadbalancer... Big Kudos to him for his donation.... Once the server is setup, i'll also tweet out about his donation and the new server as well 🙂

-

-

I think im about to do the same lolz speaking to @mikeinspace i was going to donate 1/2 my 1st dispenser earnings from my stamp to the stamp project but he suggested make the donation to xchain as its the infrastructure we all depend on!

-

yeah... counterwallet is only for using addresses from the 12-word passphrase... no importing addresses... tho you can import addresses in freewallet

-

also.. the "sweep" in counterwallet is old .. and daisy-chains sends 1 at a time to "sweep" vs using the actual "sweep" functionality in CP which moves the entire contents of a wallet to a new address in a single tx 🙂

-

tldr... use freewallet.io desktop for latest features

-

Another $1500 would purchase another xchain server for a year (same basic specs as this one, cept with SSD instead of SATA)... here is screenshot of the price on ovh.com

-

-

Kewl and this would allow me to continue to hammer the xchain api right?

-

(joke.. I use it but try to limit my requests)

-

Ok cool you able to announce the donation (once made) in the stamps room? So they know im not ruggin them!

-

of course 🙂

-

ok thanks pretty sure i had imported addresses a month ago when first tried it. The sweep with funds from another address worked to an extent but it cost a fortune. Had a pop at installing 1.8 and it is the first time git clone has asked me for username and password over cli, something to do with https in the scripts. Think i will give up now

-

@reganhimself how are you constructing the multisigs with 1000 sats instead of the default? Are you using electrum?

-

Nope python

-

was that your own script? Does it need you to run a counterparty node? i thought i saw a stamps tx creator mentioned somewhere but can't seem to find it.

-

No i just sign the returned unsigned hex in freewallet

-

Atm

-

Lmk where and how to send funds :)

-

sweet sounds simple enough. anywhere you can point me to show how to build a transaction like that?

-

Sure 2 mins

-

Send 0.05376 BTC to address bc1qqsj95e0wcm6rlwc76qqmenvgk40ueqqcywxcpg

That will send the donation to the BLACKBOX.Xchain_Server_Hosting dispenser so you will get some token credits sent back to your wallet.... I can then airdrop goodies on you in the future 🙂 Its an easy way to keep track of ppl who donated and give them some love in the future 🙂

Here is a link to the dispenser

https://sites.xchain.io/tx/1656234

-

Counterparty API | Counterparty

Developers/API.md

-

docs.counterparty.io is better

-

Only found this v recently

-

Cool will do

-

yeah... need to find time to update the counterparty.io site... I started at new.counterparty.io (a buddy owed me $1000... so I had him do a new design for CP instead of paying me) 🙂

-

need to find time to go through it and remove all the irrelevant info... point to docs.counterparty.io.

-

thanks will have deep dive

-

there is a live stream on now, they mentioned counterparty in passing, i missed the first few guests

-

-

not heard stamps mentioned yet

-

Thanks man! What is your twitter handle?

-

But really can use anything to call the rest api, even postman could work

-

@dankfroglet

-

Link

Big shoutout to #Counterparty community members @robotlovecoffee and @dankfroglet who just made donations to cover 2 new servers for the next year! Counterparty now has : - 1 New Counterparty API / Counterwallet Server - 1 New https://t.co/vzXwjhvDDQ Server #stamps $XCP 🥳🚀🤘

-

-

dump list of holders of XXX card, generate MPMA send list

-

I plan to add this feature to xchain in the future... make it easy to copy/paste a holders list and "airdrop" to them via MPMA sends
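Until something like that exists, the "dump holders, generate send list" step can be done with a few lines. This is only a sketch: the holder record shape (`address`, `quantity`) is an assumption about whatever holders dump you have, and the output is a plain list of (asset, address, quantity) rows that you would then feed to your wallet or API of choice for the MPMA send.

```python
def build_mpma_list(holders, asset, quantity_each):
    """Turn a holders dump into one (asset, address, quantity) row per holder.

    `holders` is assumed to be a list of dicts with "address" and
    "quantity" keys. Zero-balance and repeated addresses are skipped.
    """
    seen = set()
    sends = []
    for holder in holders:
        address = holder["address"]
        if holder["quantity"] > 0 and address not in seen:
            seen.add(address)
            sends.append((asset, address, quantity_each))
    return sends


# Hypothetical holders of some card, used only to show the shape.
example_holders = [
    {"address": "addr1", "quantity": 3},
    {"address": "addr2", "quantity": 0},
    {"address": "addr1", "quantity": 1},
    {"address": "addr3", "quantity": 10},
]
send_list = build_mpma_list(example_holders, "MYAIRDROP", 1)
```

`MYAIRDROP` and the addresses are placeholders; `send_list` ends up with one row each for addr1 and addr3.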

-

yeah, doing this right now

-

-

sending to 200 addresses, what do you think should be the fee? It doesn't seem freewallet is adjusting it

-

i had a similar question, what custom fee should i choose for setting up a dispenser and for creating a stamp asset. is there any way to do a dry run to see how large a given transaction is?
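One rough way to do a dry run is to build the unsigned transaction first and size it before signing. The sketch below only does the arithmetic: the byte length is half the hex length, and `signing_allowance` is an assumed padding for the signature data added later (about 107 bytes per legacy P2PKH input is a common rule of thumb, not a figure from this chat). It ignores segwit discounts, so treat the result as an upper-end estimate.

```python
def estimate_fee(unsigned_hex, sat_per_byte, signing_allowance=107):
    """Estimate a fee in satoshis from an unsigned raw transaction.

    unsigned_hex: raw transaction as a hex string.
    sat_per_byte: the fee rate you want to pay.
    signing_allowance: assumed extra bytes added once the tx is signed.
    """
    size_bytes = len(unsigned_hex) // 2  # two hex characters per byte
    return (size_bytes + signing_allowance) * sat_per_byte


# A dummy 250-byte payload stands in for a real unsigned transaction.
example_fee = estimate_fee("00" * 250, sat_per_byte=20)
```

For the dummy 250-byte payload at 20 sat/byte, this gives (250 + 107) * 20 = 7140 satoshis.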

- 08 April 2023 (2 messages)

-

@reganhimself FYI... your server is already up and in production and helping out... your server is http://host7.xchain.io/

-

- 09 April 2023 (2 messages)

-

https://github.com/jdogresorg/freewallet-desktop/commit/d65be8dde0355ab3ad14722cb737fff1a3b29762

Will freewallet use multisig or segwit encoding for long descriptions?

bump asset description limit to 10,000 chars · jdogresorg/freewallet-desktop@d65be8d

- addresses issue #118

-

It will use whatever cp uses as default… which is op_return for smaller descriptions n multisig for longer ones.

- 10 April 2023 (8 messages)

-

Can anyone point me towards some code (Python preferably) that can query xchain's API to get the list of assets in a wallet, credits and debits to a wallet, into a google sheet, or saved as a CSV?

-

I have found it easier to use the cp api; the calls return json, so you will have to write code to convert it to whatever data storage you want to use
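As a starting point for the JSON-to-CSV step, here is a minimal sketch. The `get_balances` method and the `address` / `asset` / `quantity` fields follow the counterparty-lib API docs as I understand them; sending the request (endpoint, credentials) is left out, and the sample row is invented.

```python
import csv
import io


def balances_request(address):
    """JSON-RPC 2.0 body asking for all balances held by one address."""
    return {
        "jsonrpc": "2.0",
        "id": 0,
        "method": "get_balances",
        "params": {
            "filters": [{"field": "address", "op": "==", "value": address}],
        },
    }


def rows_to_csv(rows, fields=("address", "asset", "quantity")):
    """Convert a list of JSON result rows into CSV text.

    Keys not listed in `fields` are ignored, so the same helper can be
    reused for credits and debits by passing a different field list.
    """
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf, fieldnames=fields, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Invented sample row in the shape the API is expected to return.
sample = [{"address": "1abc", "asset": "XCP", "quantity": 100000000}]
csv_text = rows_to_csv(sample)
```

Writing `csv_text` to a file gives something Google Sheets can import directly.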

-

Overview | Counterparty

`counterparty-lib` provides a JSON RPC 2.0-based API based off of

-

Overview | Counterparty

`counterparty-lib` provides a JSON RPC 2.0-based API based off of

-

you are prob going to need to do many calls, I would look to learn calling into the API 1st, once you have that down it is easy to figure out what you want and pull it

-

I have used Postman a lot to just hit API to figure out calls etc

-

Postman API Platform | Sign Up for Free

Postman is an API platform for building and using APIs. Postman simplifies each step of the API lifecycle and streamlines collaboration so you can create better APIs, faster.

-

A fun trick with postman, is in developer tools in the browser, network tab. Any request you can right-click and copy as curl, then in postman import raw text and paste in the curl... Poof now I've got a postman call of the request I just stole from my browser

- 11 April 2023 (103 messages)

-

-

-

docs.counterparty.io

-

counterparty.io site needs a revamp badly

-

You releasing fw today btw?

-

Excited to play

-

naww bro... still on tokenstamps.io updates.... tryna get it all finished and launched... once that is out the door, then i'll focus back on freewallet

-

-

feature creep is real "should add ability for artists to enter name/title/description info.... should add ability to see duplicates... should add ability to see similar images (not exact matches, but close)"

-

-

trying to limit scope creep as much as possible... but its tough 😛

-

Lool

-

-

-

-

-

“Can’t you just do the thing…”

-

Kek

-

lol... "its real simple just do X... then make them display like Y... why cant you do that right now? I can easily explain what I want to see, so make it happen now!?!" 😛

-

"Can't you just ask chatGPT?"

-

I def wanna live in the BTC programmer citadel... listen to all the cries of the "but how do I do X... why can't I see my info like Y?" pleebs from outside the walls 😛

-

“Get the intern to work on it”

-

OMFG... for real... cant tell you how many times i've heard "just tell ChatGPT to do it for you" in the past few weeks 😛

-

While you're there can you up the price of btc too?

-

He did already

-

Old. ChatGPT new version.

-

Asking for a fren

-

BTC nope... but got xcer focused on drawing attention to how XCP is undervalued on twitter (he already does a good job of that now... just stepping up efforts more now).... not direct price-pump (that is icky)... but more calling attention to how undervalued XCP is.... being able to see how much $$$$ is transacted on the platform, and how much value is stored on the platform, would go a LONG way to help demonstrate how undervalued the CP platform is, and by extension, the XCP token

-

-

in the API request... same level as fee

-

-

-

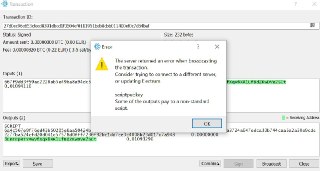

I haven't used that exact advanced param yet... but it "should" work like in this postman request

-

-

paging @pataegrillo if you're done with your blackout and have power again.... your help would be appreciated... I am not familiar with this exact dust_return_pubkey option (haven't used it myself... but docs indicate it should work) https://docs.counterparty.io/docs/develop/api#advanced-create_-parameters

Technical Specification | Counterparty

Read API Function Reference

-

If I remember correctly, that option is to give the pubkey that would be used for mpma the first time. Let me check to be sure

-

-

i just checked it, it's like i said, if you don't send that pubkey, when doing a mpma (or any p2sh) CP will try to find the pubkey on any of your old outputs. If CP can't find anything, then you will get the error that says something like "can't find public key" (i don't remember 😅)

-

that param only has to deal with multisig... nadda to do with p2sh (mpma)

-

exactly, it also works for multisig

-

dust_return_pubkey (string): The dust return pubkey is used in multi-sig data outputs (as the only real pubkey) to make those the outputs spendable. By default, this pubkey is taken from the pubkey used in the first transaction input. However, it can be overridden here (and is required to be specified if a P2SH input is used and multisig is used as the data output encoding.) If specified, specify the public key (in hex format) where dust will be returned to so that it can be reclaimed. Only valid/useful when used with transactions that utilize multisig data encoding. Note that if this value is set to false, this instructs counterparty-server to use the default dust return pubkey configured at the node level. If this default is not set at the node level, the call will generate an exception.
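To make "same level as fee" concrete, here is a sketch of a `create_issuance` request body with `dust_return_pubkey` sitting next to `fee` in `params`. The method and parameter names come from the API docs quoted above; every value passed in (source address, asset name, description, pubkey hex) is a placeholder you would supply, and whether the node accepts a given pubkey is not something this sketch checks.

```python
def create_issuance_request(source, asset, description, fee,
                            dust_return_pubkey=None):
    """Build a JSON-RPC 2.0 body for create_issuance with multisig encoding.

    dust_return_pubkey, when given, goes at the same level as `fee`.
    """
    params = {
        "source": source,
        "asset": asset,
        "quantity": 1,
        "divisible": False,
        "description": description,
        "fee": fee,
        "encoding": "multisig",
    }
    if dust_return_pubkey is not None:
        params["dust_return_pubkey"] = dust_return_pubkey  # hex string
    return {
        "jsonrpc": "2.0",
        "id": 0,
        "method": "create_issuance",
        "params": params,
    }


# Placeholder values only; replace with a real address and pubkey hex.
request_body = create_issuance_request(
    "SOURCE_ADDRESS", "A1234567890123456789", "stamp:...", 5000,
    dust_return_pubkey="PUBKEY_HEX",
)
```

Leaving `dust_return_pubkey` out falls back to the default behaviour described in the docs text above.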

-

-

ahh... guess we should update the docs to indicate we support p2sh as well

-

will create github issue

-

-

update API docs for `dust_return_pubkey` advanced param · Issue #164 · CounterpartyXCP/Documentation

dust_return_pubkey option now works with both multisig and p2sh encoding formats... need to update docs to match code.

-

if you do a send with your pubkey you won't need it

-

sorry, when i said "send" I meant any "output"

-

-

-

-

ohhhh i see, hmmm, good idea

-

-

i can see it's throwing an error

-

-

-

-

-

respectfully disagree (IMO the responsible thing to do is to collect that dust not double-down and make it TRULY unspendable).... but, glad you're playing around with things.... since "Bitcoin Stamps" wants their stamps to remain in UTXOs (unspent)... would make sense for them to update their minting app to use your new "unspendable" method (once you are confident its working properly) ... force things to be minted in the way the project wants. 🙂

-

-

-

once someone creates a tool to reclaim the dust with a few clicks it'll be up to the market to decide

-

i can almost guarantee stampchain is not checking if those are spent or not

-

-

most people probly dont realize how much they can reclaim

-

and if they do and their stamp doesnt disappear from stampchain then no reason not to

-

its just a meme, if in practice nothing changes except you get some "free money" i bet most people will do it

-

-

agree

-

IMO the stamp should remain, and just indicate a "spent utxo".... vs showing a broken image akin to kicking them out of the project..... aim for unprunable... but once you accept them in the door to your project, should always show stamp image and just indicate they aren't strictly following the rules... but, not my project 🙂

-

sure obvi up to them on how they show it, from an informational POV just showing "spent" or "unspent" makes the most sense

-

but like i said i doubt they're tracking it

-

@B0BSmith I imagine some in the stamps and CP community would appreciate your tool being updated to "exclude" a list of txs.... so ppl could collect their dust, but "exclude" bitcoin stamp txs if they wanted...

-

Essentially what we have planned although maybe the RPW method of fading the art 🤷‍♂️

-

yeah that works too

-

although could get confusing

-

Right now it’s more of a threat of future implementation than current implementation

-

So yeah you could “get away” with it today but for clarity the claim was always about what the nodes could or couldn’t do not the artist. If the artist can destroy their art I would still argue the art was unprunable

-

yeah I am thinking on that .. and it was suggested I make a tool to allow reclaiming dust laid down by a specific tx

-

nope .. I get a script pubkey error when trying to broadcast my signed tx with a dummy 3rd pubkey in the multisig -

Well if you remember using opcheckmultisig was an accident, the initial plan was to use p2sh and store data in the signature within the Tx rather than the witness of a segwit tx -

Yup -

Counterwallet was a bootstrap -

So even after spending those msig outputs your data is still onchain rather than in a witness -

I think the actual solution is just removing the limits of op_return -

Give people a place to store data if they want -

Op_return is the most straightforward efficient way -

It’s the “right” decision but it’s a toxic solution. It’s viewed as capitulation -

yeah an unlimited op return may be too much but making it allow bigger than it is now would be good for storing data which it seems people want to do -

its not unlimited -

its limited to whatever the max size of a standard tx is -

and then ultimately limited to 1 mb within the block -

well slightly less -

-

any idea why? and if the error can be fixed, that string of 0000's is a valid pubkey I believe -

i believe public keys are supposed to start with 02 or 03 -
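
A minimal sketch of the prefix check mentioned above, for anyone wanting to sanity-check a dummy key before broadcasting. It only looks at the serialized shape (33 bytes starting with 02 or 03, or 65 bytes starting with 04) and does not verify that the point lies on the curve. The function name is hypothetical, and whether the broadcast error in this thread came from exactly this check is an assumption.

```python
def looks_like_pubkey(hex_key: str) -> bool:
    """Check only the serialized shape of a secp256k1 public key.

    Assumption: compressed keys are 33 bytes with prefix 0x02/0x03 and
    uncompressed keys are 65 bytes with prefix 0x04. Hybrid encodings
    are deliberately ignored in this sketch.
    """
    try:
        raw = bytes.fromhex(hex_key)
    except ValueError:
        return False  # not valid hex at all
    if len(raw) == 33:
        return raw[0] in (0x02, 0x03)
    if len(raw) == 65:
        return raw[0] == 0x04
    return False


# An all-zero 33-byte string has prefix 0x00, so it fails the shape check.
print(looks_like_pubkey("00" * 33))
print(looks_like_pubkey("02" + "11" * 32))
```

So a string of zeros would need at least a 02 or 03 in front to pass a check like this one.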

ah ty -

There used to be this really handy burn address generator. Anyone have a link? -

I think I know the one you mean

https://gobittest.appspot.com/ProofOfBurn

-

I don't know exactly why, i would have to debug it. I'm curious, what solution did you find before? -

-

well, it's an edge case, so probably there is a verification preventing it. I'm just speculating 😅 -

- 12 April 2023 (14 messages)

-

Isn't 80 byte opreturn a soft rule? Still enforced?

I recently did a >80 byte opreturn on testnet. -

It might just be considered non-standard and won’t get relayed, same as multiple op_return outputs, wonder what the actual consensus limit is -
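
To make the relay-vs-consensus distinction concrete, here is a rough sketch that builds an OP_RETURN script and compares its size with an 83-byte cap. The cap is an assumption (the default data-carrier relay limit in Bitcoin Core at the time of this chat, configurable per node); it is policy, not consensus. Function and constant names are made up for the example.

```python
# Assumption: default relay policy caps the whole OP_RETURN script at 83 bytes
# (1 byte OP_RETURN + 2 bytes push header + 80 bytes of data).
ASSUMED_MAX_DATACARRIER_SCRIPT = 83


def op_return_script(data: bytes) -> bytes:
    """Build OP_RETURN <data> using the shortest push opcode."""
    if len(data) <= 75:
        push = bytes([len(data)])                # direct push
    elif len(data) <= 255:
        push = bytes([0x4C, len(data)])          # OP_PUSHDATA1
    elif len(data) <= 65535:
        push = bytes([0x4D]) + len(data).to_bytes(2, "little")  # OP_PUSHDATA2
    else:
        raise ValueError("payload too large for this sketch")
    return bytes([0x6A]) + push + data           # 0x6a = OP_RETURN


def fits_assumed_relay_policy(data: bytes) -> bool:
    """True if the script is within the assumed default relay limit."""
    return len(op_return_script(data)) <= ASSUMED_MAX_DATACARRIER_SCRIPT


print(fits_assumed_relay_policy(b"\x00" * 80))
print(fits_assumed_relay_policy(b"\x00" * 81))
```

A payload over 80 bytes can still be valid in a block; it just may not propagate through nodes running the assumed default policy.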

-

I managed to push it with blockcypher.

It's an xcp broadcast msg that takes >80 bytes. -

Let's see when it confirms. Fee only 3.5 sat/b so it may take a while -

BTC Transaction 27d0ec96e653cfecd8391dbcd1f0504ef0111951beb8cbb011400ef0c7d54baf | BlockCypher

0.0109329 BTC transacted in TX 27d0ec96e653cfecd8391dbcd1f0504ef0111951beb8cbb011400ef0c7d54baf (fees were 0.0000082 BTC). 1 input consumed, 2 outputs created.

-

-

I notice your tx does not show in mempool.space's mempool, so it's not being fully relayed

https://mempool.space/address/3CnTrpRTYMwVfXqx6XR1LfBdZKwDVe2SCt

-

I’m surprised blockcypher accepted it as valid -

Address starts with 3 but is single key. Generated with: https://github.com/JeanLucPons/VanitySearch

-

ahh ty for explanation .. its been a while since I generated a vanity address ..I think oclvanitygen was the tool I once used but only did 1type addresses -

Accepted by blockcypher and btc.com.

I tried ~5 others, all rejected it with scriptpubkey error.

Will be interesting to see if it gets included in a block once the fee level drops to 3 sat/b -

Vanitysearch is really fast. 200M/sec on my laptop gpu.

Can get to 9 character on bech32 addr overnight -

- 13 April 2023 (3 messages)

-

well…. superpowers…. I got this running: https://github.com/mayooear/gpt4-pdf-chatbot-langchain

converted my sourcecode files into pdf’s (thanks for also writing that script for me gpt4)

I ingested the source to fine tune gpt4,

I just asked it some complex questions about my code… *~GOD MODE~*

-

It looks like it got dropped from mempool -

- 14 April 2023 (67 messages)

-

reading this, it looks like the 7800 sats min for a multisig output was a misunderstanding in the first place, right?

https://bitcointalk.org/index.php?topic=528023.msg7469941#msg7469941

I read it as 780 sats and then it needs to be up to 882 sats but nowhere near 7800 sats if I'm reading it right -

check again ... they just left a trailing zero off 0.0000780 == 0.00007800 -
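
For anyone else counting decimal places, a small sketch of the BTC-to-satoshi conversion using exact decimal arithmetic. It only converts a figure as written; it says nothing about which figure the linked post intended. The helper name is hypothetical.

```python
from decimal import Decimal

SATS_PER_BTC = Decimal(100_000_000)


def btc_to_sats(amount: str) -> int:
    """Convert a BTC amount given as a string into whole satoshis."""
    sats = Decimal(amount) * SATS_PER_BTC
    if sats != sats.to_integral_value():
        raise ValueError("amount has more than 8 decimal places")
    return int(sats)


# A trailing zero does not change the value, but an extra leading zero does.
print(btc_to_sats("0.0000780"), btc_to_sats("0.00007800"), btc_to_sats("0.00000780"))
```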

Bitcoin was a lot cheaper so they probably didn’t quibble about being so much above the dust limit at the time -

I counted the decimal points

-

that's what I figure as well. 10k sats was not much back then.

-

I stand corrected... I read the summary and saw the mistake there.... yeah, looks like it should have been 780 instead of 7800 -

it should be close to 546 which is the default dust limit, and multisig can have slightly more overhead I guess, but not 10-15x

-

can't broadcast with 546 sats in multisig... tried that in the past when we were lowering normal dust to 546 and the issuance txs had problems getting mined..... also in recent weeks, a bunch of us have been doing testing to find those lower limits... 786 seems to be the lowest that we have found in testing to get mined pretty reliably -

Javier spent some time last week trying different issuances with different multisig dust levels to find the lowest amount allowed... and 786 was that number... please let us know if you have success with lowering it to 546 in your txs -
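
The 786 figure found in testing lines up with a back-of-the-envelope dust calculation. This is a sketch, assuming the Bitcoin Core style rule (serialized output size plus 148 bytes for a future spend, priced at a 3000 sat/kvB dust relay fee) and a bare 1-of-3 multisig with compressed 33-byte keys. The constants and function names are assumptions for illustration, not taken from counterparty-lib.

```python
ASSUMED_DUST_RELAY_FEE_SAT_PER_KVB = 3000  # assumed node default
ASSUMED_SPEND_INPUT_BYTES = 148            # assumed cost of spending a non-witness output


def compact_size_len(n: int) -> int:
    """Bytes needed for the script-length prefix."""
    if n < 0xFD:
        return 1
    return 3 if n <= 0xFFFF else 5


def bare_multisig_script_len(n_keys: int, key_len: int = 33) -> int:
    """OP_m + n * (push opcode + key) + OP_n + OP_CHECKMULTISIG."""
    return 1 + n_keys * (1 + key_len) + 1 + 1


def dust_threshold(script_len: int) -> int:
    """Smallest output value (in sats) not treated as dust under the assumptions above."""
    txout_len = 8 + compact_size_len(script_len) + script_len  # value + length + script
    return (txout_len + ASSUMED_SPEND_INPUT_BYTES) * ASSUMED_DUST_RELAY_FEE_SAT_PER_KVB // 1000


print(dust_threshold(25))                           # P2PKH script is 25 bytes
print(dust_threshold(bare_multisig_script_len(3)))  # bare 1-of-3, compressed keys
```

Under these assumptions a P2PKH output comes out at 546 and a bare 1-of-3 multisig output at 786, which would explain why 546 fails for multisig.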

maybe we'll go with 1000 for now, not sure if that's what @mikeinspace is using...

-

yeah... 1000 is what I have been using and never had issues 🙂 -

They just updated the stamper maker to use 796.

I personally use 1000 and have been able to mint a 6kb img -

sad to think of all the multisig dust that was unnecessarily spent over the years based on this one misunderstanding... 780 != 7800 😛 .... Thanks for pointing this flaw out @ordinariusprofessor ... will get dust levels adjusted in upcoming release -

Wonder how much is sitting there... It's reclaimable as well haha -

I remember when mastercoin figured out how to spend all their msig outputs, people went mining their own wallets, -

Thanks to @B0BSmith's tool I was able to de-dust -

Easy 200$ -

Lol -

don't trust, verify. amirite 😎

-

any way to workaround the issue where counterpartyd doesn't accept the transaction because pubkey is not known (address hasn't made any outgoing tx) ?

-

if it is your own address, calculate the pubkey from the privkey .... if it's not your address then no, as you can't unhash it -
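
For reference, a bare-bones sketch of deriving a compressed pubkey from a private key on secp256k1 in pure Python (needs Python 3.8+ for modular inverse via `pow`). It is for illustration only: it is not constant-time and should not be used with real keys; a maintained library is the sensible choice in practice. How the resulting pubkey is then passed to the node is not covered here.

```python
# secp256k1 domain parameters
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (
    0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
    0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8,
)


def point_add(a, b):
    """Add two curve points; None represents the point at infinity."""
    if a is None:
        return b
    if b is None:
        return a
    (x1, y1), (x2, y2) = a, b
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # a + (-a)
    if a == b:
        lam = 3 * x1 * x1 * pow(2 * y1 % P, -1, P) % P      # tangent slope
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P     # chord slope
    x3 = (lam * lam - x1 - x2) % P
    y3 = (lam * (x1 - x3) - y1) % P
    return (x3, y3)


def scalar_mult(k, point):
    """Double-and-add scalar multiplication (not constant-time)."""
    result = None
    while k:
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def compressed_pubkey(privkey: int) -> str:
    """Return the 33-byte compressed public key as lowercase hex."""
    if not 0 < privkey < N:
        raise ValueError("private key out of range")
    x, y = scalar_mult(privkey, G)
    prefix = "02" if y % 2 == 0 else "03"
    return prefix + format(x, "064x")


print(compressed_pubkey(1))
```

The other direction (address back to pubkey) is what cannot be done, since the address only commits to a hash of the key.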

This is another reason for my de duster tool: Counterparty OGs left a lot of dust lying around, 10860 sats was a figure used back then too. It's not just asset registrations that create it either, other counterparty message types use bare multisig outputs too -

By block 299,184 there is 1 bitcoin or over 100 million satoshi of spendable multisig dust in just over 9000 utxos -

-

FYI.. working on a few CIPS:

1. "Taproot Encoding" CIP... To enable taproot support on Counterparty, allowing for much more efficient data storage at much lower cost compared to multisig (will be on par with ordinals encoding costs)

2. "STAMP Protocol" CIP... Extending on mike's original stamps protocol paper, making it an official CIP, and cleaning it up a bit so that it clarifies a "stamp" is anything with "stamp:" prefix in issuances, and that any file type can be stamped... and to clarify the 4 different formats that have been defined for stamps

3. "STAMP Filesystem" CIP... Creating a local repository on fednodes of all stamp data (optional component... ppl can choose not to store/save the stamped files) -

IMO ppl clearly want to stamp things, and will want to stamp larger and larger files (and CP can/should support this)... and there has been some discussion on enforcing limitations on the asset description field... in an attempt to possibly limit the amount of bloat STAMPs can add to the CP issuances and messages tables (we don't want/need to be storing all stamp file data in a database)

We can solve this "stamp storage / bloat" issue by simply treating a "STAMP" as a special type of "message" embedded within issuances ... when counterparty encounters a "STAMP:" message in an issuance, it will still store all issuance data the exact same as it does now, except instead of writing the STAMP file data to the description field, it will decode the base64 stamp data and write it to an actual file in the "STAMP Filesystem"... and the issuances and messages tables will be updated with a pointer to this STAMP file. -

This will allow CP to :

- Encode files as large as a user wants (limited only by block size)

- Avoid bloating issuances and messages with STAMP file data

- Allow every fednode to become a STAMP repository

- Retrieve encoded STAMP data directly from STAMP transactions on any full archival node

From a technical perspective, the STAMP Filesystem is very easy to integrate... just a simple script to detect "STAMP:" and write data to a file (already doing this on tokenstamps.io), and then an nginx webserver added to the fednode stack to allow access to the STAMP files... easy peasy 🙂 -
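
A rough sketch of the "detect STAMP: and write data to a file" step described above. This is not the tokenstamps.io script or any counterparty-lib code: the function names, the case-insensitive prefix match, the `.bin` extension, and naming files by transaction hash are all assumptions made for illustration.

```python
import base64
import binascii
from pathlib import Path
from typing import Optional

STAMP_PREFIX = "stamp:"  # assumption: prefix is matched case-insensitively


def extract_stamp(description: str) -> Optional[bytes]:
    """Return decoded file bytes if the description holds a base64 stamp, else None."""
    if not description.lower().startswith(STAMP_PREFIX):
        return None
    payload = description[len(STAMP_PREFIX):].strip()
    if not payload:
        return None
    try:
        return base64.b64decode(payload, validate=True)
    except (binascii.Error, ValueError):
        return None  # prefix present but payload is not valid base64


def store_stamp(tx_hash: str, description: str, root: Path) -> Optional[Path]:
    """Write the decoded stamp under root and return its path (hypothetical layout).

    tx_hash is assumed to be a trusted hex string; it is used directly as a file name.
    """
    data = extract_stamp(description)
    if data is None:
        return None
    root.mkdir(parents=True, exist_ok=True)
    path = root / f"{tx_hash}.bin"
    path.write_bytes(data)
    return path
```

In a setup like the one proposed, the returned path is what the issuances and messages tables would point to, and the web server would serve files from `root`.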

I need to spend the beginning of next week working on a freewallet update so that we can get STAMPs support added and a release out the door.... but, after that my next priority is writing up these 3 CIPs -

Javier has already been working on Taproot integration the past week, and is close to having it working.... I'm writing up these CIPs to demonstrate to newcomers how updates get made (through the Counterparty Improvement Proposals)... and also to collect funds to cover Javier's development costs -

I know we all want to move forward with taproot... so, got him working on it before securing funding... but, we def need to cover his costs 🙂 -

for taproot encoding do you mean encoding counterparty messages in segwit data? -

yes... just another encoding method for CP to use on ANY transaction "encoding":"taproot" -

@pataegrillo can speak more on it since he is working on the integration... but that is the plan, to allow encoding of data using taproot signatures -

Taproot support would also solve some 3rd party UX issues. One thing we are hearing back is a concern that a wallet user may try and accept a stamp to their taproot address (containing their ordinals). In doing so, their stamps are permanently stuck. There are workarounds like generating an entirely separate seed for stamps, but obviously everything on taproot would simplify things. -

There are 3 things in the to-do list. The first one is to let CP parse taproot addresses (which is done). The next one is to enable taproot to create issuances, sends, etc. And the last one is the P2WSH -

Storing consensus data in segwit would be a big change for counterparty -

indeed, i told jdog that in approx 3 weeks i think p2wsh could be ready, that could open a big window for CP 😄 -

Agreed... The default encoding methods would remain the same for now... And we would still be able to retrieve all the encoded CP data on any full archival node yes?

What concerns do you have? -

Bitcoin doesn’t keep consensus data in the witness -

-

a full archival node will have all the witness data tho... yes? so CP could still retrieve the data and reconstruct the CP message data at any point... just like it does now... correct? What am I missing? How can this potentially break CP if we keep the 'full archival node' requirement on fednode? -

stamps people are just incredibly illiterate, you are correct jdog. they are infinitely cringe and so much worse than any ordinals or inscriptions

-

We should get Juan’s opinion -

😂 -

It just needs to be discussed and broken out into its own cip -

It’s a major change -

Is this basically inscriptions but in stamp format?

Would the cost of a large file with this and stamps be the same cost? -

cost to encode a file would be WAAAY cheaper than the multisig method.... could encode same file size for same cost as inscriptions.. -

But, important we dont just turn on the spigot without first having a way to handle the larger stamps.... we allow large stamps and store all in the database, gonna get ugly real fast -

8-10kb per stamp is "manageable" emergency.... 500kb-750kb+ stamps... we start having real issues -

I'll start up a discussion thread next week on the forums when working on the CIP and we can have discussions there so history is captured... def want there to be ample time for ppl to weigh in and make their voice heard before any change is implemented into a release. 👍 -

It would literally be an inscription -

Inscription without the ordinal? -

-

The token is the ordinal -

-

If you believe it -

-

People couldn’t wrap their heads around stamps at first. They didn’t get how the token was connected to the art. It’s no different than ordinals: it’s a shared belief -

-

-

Everything is made up to some degree -

-

Bitcoin is literally made up -

This!!!! Lol when people argue about stuff I often think about this and roll my eyes. -

This whole damned conversation is made up 😂 -

Thanks for coming up with stamps mike... not only are you forcing important conversations and dev to happen on the bitcoin core level... you're also forcing CP dev and conversations.... thanks to your simple "stamps" protocol... CP is (hopefully, if approved) going to get a new bolt-on decentralized file mirroring system.... can prolly implement it all in less than 50 lines of code 🙂 - 15 April 2023 (3 messages)

-

Joined. -

You belong in here man 🙂 -

oh haha - 16 April 2023 (5 messages)

-

How will taproot encoding compare with the segwit/bech32 encoding already in use by xcp? -

Re letting 2nd, 3rd, ..., nth output trigger a dispense: has the added parsing time and scalability impact been analyzed? -

allow triggering of dispensers by all tx outputs, not just the first output by pataegrillo · Pull Request #1222 · CounterpartyXCP/counterparty-lib

-

Not sure what you mean… compare how? It’s just another encoding mechanism… what metric are you looking for specifically -

No, this change has not been thoroughly tested yet…. Feel free to do so yourself on testnet and report back your findings…. Shouldn’t add much overhead, but would be good to have the evidence to back up that belief👍🏻

I’m pretty busy lately, so haven’t had time to test stuff for next cp release yet… prolly another couple weeks out before I can get back to testing the pending PRs… gotta get freewallet-desktop and dogewallet-desktop releases done to support stamps, then can focus back on CP dev/testing - 17 April 2023 (6 messages)

-

Size of btc tx (vbytes) / cntrprty message length.

I suggest tests for 100 bytes, 500 bytes, 1000 bytes of cntrprty msg.

Easiest would be a broadcast. If I'm not mistaken, an N character broadcast makes an N+34 long cntrprty msg.

I dont run a node. Cool if someone who does, runs a test. -

Would need to test on mainnet though. My concern is that multi output btc tx are common. Counterparty without this upgrade can discard outputs after the 2nd.

I have no idea how much extra time it will take to check all outputs? <1ms/block? >1s/block? -

-

Welcome jamil! -

-

Jamil has integrated psbts into gamma.io for ordinals trading, want to explore if/how they can be leveraged for counterparty asset trading - 18 April 2023 (21 messages)

-

@jdogresorg is api.counterparty.io running this commit? https://github.com/CounterpartyXCP/counterparty-lib/pull/1228 because it returns correct values but my counterparty node fails with "insufficient btc" error for an address with available funds.

Send change smaller than DUST to miners fee instead of error by pataegrillo · Pull Request #1228 · CounterpartyXCP/counterparty-lib

This fix adds a new parameter dust_size to the backend utxo sort function in order to change it from DEFAULT_MULTISIG_DUST_SIZE to DEFAULT_REGULAR_DUST_SIZE depending if the tx uses a "multisi...

-

it could be that your address has a lot of utxos -

consolidating them should fix that issue -

it definitely has lots of utxos but it'll have more, shouldn't counterparty node pick the largest available? I'm more confused on why the public instance is working correctly and not the local one. who maintains that one?

-

jdog is the right person to ask, not sure why they’d be different -

cool I'll wait for him, meanwhile I'll do more debug logging to see if it's something else but looks pretty similar...

-

its been an ongoing issue though, utxo selection is not great with the api -

yeah many issues over the years, would make sense to fix the real cause

-

i think you can feed it utxos as a parameter -

I do for issuing assets but for send asset node needs to pick an available utxo to pay for fees, that's it.

-

gotcha, its probly something dumb thats causing the issue -

no kidding. I enabled debug logging and on next block it worked 😂

-

lol, perfect -

I saw you wrote on the PR, the fix is in there, did you take a look at the commits? -

yes I did, I was about to pull the fix and use it but a simple restart somehow fixed my issue for now. will keep monitoring...

-

you are doing a tx after another without waiting for confirmation, right? -

No, in that case, there were multiple available utxos with 10+ confirmations but get_unspent_utxos was returning empty array

-

no, not running this PR on api.counterparty.io -

will get to testing the current PRs and working towards a new CP release in the coming weeks -

hrm... that sounds suspicious and should be reproducible... if you want to create a github issue for this, perhaps @pataegrillo can dig into it a bit more... if you have unspent utxos that are not pending, they should definitely be showing up in get_unspent_utxos -

Will save logs and open issue if I see the problem again.

- 19 April 2023 (71 messages)

-

hmmmm, ppl already trying to trigger a dispenser with taproot 😅. Soon, it'll be enabled -

loool -

oh no -

of course, those balances are part of the testing, they won't be in the real database -

Sharing this for transparency with other minting services. It's a completely opt-in feature, but if you choose to opt in, please stay in compliance with what is laid out in the documentation. Please reach out with any questions. https://github.com/mikeinspace/stamps/blob/main/Key-Burn.md

-

@ordinariusprofessor @jdogresorg -

if you’re going to burn it anyway, why not store more data in that 3rd public key -

That's a very good question... @B0BSmith -

-

also makes me wonder if you could create larger m-of-n multisigs -

yeah 1/3 of an unspendable stamp is the burn key .. would be nice if you could use it for different data for each output, or data across that 3rd key output, but the counterparty api only allows you to specify a single dust return pubkey no matter how many outputs -

Every m-of-n combination is valid (up to n=20), but standardness rules only allow up to n=3.

This limitation is enforced when sending (i.e., sending to a 2-of-4 multisig will not be considered standard). -
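
The consensus-vs-standardness split above can be written down as a tiny classifier. This is only a sketch of the rule as stated in this message (n up to 20 valid, n up to 3 standard for bare multisig); the function name and the return labels are made up, and values outside the range discussed are simply rejected.

```python
MAX_CONSENSUS_KEYS = 20     # per the message above
MAX_STANDARD_BARE_KEYS = 3  # per the message above


def classify_bare_multisig(m: int, n: int) -> str:
    """Label an m-of-n bare multisig output as 'standard' or 'non-standard'."""
    if not (1 <= m <= n <= MAX_CONSENSUS_KEYS):
        raise ValueError("outside the m-of-n range discussed here")
    if n <= MAX_STANDARD_BARE_KEYS:
        return "standard"
    return "non-standard"  # valid in a block, but not relayed by default policy


print(classify_bare_multisig(1, 3))
print(classify_bare_multisig(2, 4))
```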

-

-

This is interesting. tit for tat. -

@mikeinspace can you elaborate on why a library of burn addresses is important to keep track of? just for assurances and detecting so to apply badges reliably? -

It's partially badges and also partially a "projection of intent", meaning if we always just use a single burn address it may become trivial for nodes to just prune that address out of the utxo set even though it's technically "spendable". I consider this an extreme escalation which I don't think would happen, but by having more than one address we cycle through, it signals that it will become a cat and mouse game if nodes start going down the path of pruning spendable outputs. Right now the burn addresses are very trivial and published, but we could use something in the txn itself to derive the burn addresses, making the amount of compute nodes would need to dedicate to this cat and mouse game increase exponentially. -

yeah figured…. very interesting play. -

https://github.com/Jpja/XCP-Asset-Timeline/blob/main/js/burn_address.js

JS function for deciding whether an addr is a burn address.

Could this come in handy?

I also have a py script for generating burn addresses.

-

maybe i’ll use my BURN asset to list deprecated burn addresses and list encrypted active burn addresses (decrypt via minter scripts?). Burn Address Rotation (BAR) -

burn incentive token lol -

send BURN to burn addresses and pull holders for list, thats perfect -

ive seen people try to pass off vanity addresses as burn addresses before tho, so it is a grey area -

Isn’t there a certain character threshold after which it’s almost impossible that it would be a vanity? -

just say english words or repeating characters up to checksum -

theres no reason to create a half burn address -

yup... the repeating character is key... -

1JDogZS6tQcSxwfxhv6XKKjcyicYA4Feev

1JDogXXXXXXXXXXXXXXXXXXXXXXXX4Feev -

pretty obvious with the XXX in there that it is a burn address... think we should require a minimum of 3 Xs before the checksum... not sure we can rely on english words... some burn addresses may contain things that are not in dictionaries (like JDog) -
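One common way to build an address of this shape, sketched in Python under the assumption of a legacy Base58Check address starting with `1`: pad the readable prefix with a filler character, decode it, then recompute the 4-byte checksum so only the last few characters change. `make_burn_address` and `is_valid_address` are hypothetical names for illustration and are not taken from any script mentioned in this chat.

```python
import hashlib

B58 = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"


def b58decode_int(s: str) -> int:
    """Decode a Base58 string to an integer (ValueError on a bad character)."""
    n = 0
    for ch in s:
        n = n * 58 + B58.index(ch)
    return n


def b58encode(data: bytes) -> str:
    """Encode bytes as Base58, mapping each leading zero byte to '1'."""
    n = int.from_bytes(data, "big")
    out = ""
    while n:
        n, r = divmod(n, 58)
        out = B58[r] + out
    pad = len(data) - len(data.lstrip(b"\x00"))
    return "1" * pad + out


def checksum(payload: bytes) -> bytes:
    """First 4 bytes of double SHA-256, as used by Base58Check."""
    return hashlib.sha256(hashlib.sha256(payload).digest()).digest()[:4]


def make_burn_address(prefix: str, fill: str = "X", length: int = 34) -> str:
    """Pad the prefix with the filler, then fix up the checksum."""
    template = prefix.ljust(length, fill)
    raw = b58decode_int(template).to_bytes(25, "big")
    if raw[0] != 0:
        raise ValueError("template does not decode to a version-0 address")
    payload = raw[:21]  # version byte + 20-byte hash nobody has a key for
    return b58encode(payload + checksum(payload))


def is_valid_address(addr: str) -> bool:
    """Check only the Base58Check checksum of a 25-byte address."""
    try:
        raw = b58decode_int(addr).to_bytes(25, "big")
    except (ValueError, OverflowError):
        return False
    return raw[21:] == checksum(raw[:21])
```

Calling `make_burn_address("1JDog")` keeps the readable prefix and most of the X run, and only the tail is replaced by whatever the checksum requires. Not every prefix works at 34 characters: the sketch raises `ValueError` when the padded template does not decode to a version-0 address.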

1SomeReallyLongBurnStringXXXX4Feev -

also could support segwit/bech32 burn addresses if you wanted a bit more space to allow for longer custom string 🙂 -

TP's Go Bitcoin Tests (Bitcoin Go Unit Tester)

-

If burn address is obvious it would have short life in this context. so BAR seems important. Maybe rotate burn address every n blocks. -
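A rough sketch of what rotating every n blocks could look like, in Python. Everything here is an assumption for illustration: the function name, the idea of cycling through a fixed published list, and the placeholder labels standing in for real addresses. Nothing in this chat specifies how BAR would actually pick an address.

```python
def active_burn_address(block_height: int, addresses: list, n_blocks: int) -> str:
    """Pick the burn address for a block by cycling the list every n_blocks."""
    if n_blocks <= 0 or not addresses:
        raise ValueError("need a positive rotation interval and at least one address")
    epoch = block_height // n_blocks          # which rotation period we are in
    return addresses[epoch % len(addresses)]  # wrap around when the list runs out


# Placeholder labels, not real addresses.
rotation = ["burn-address-A", "burn-address-B", "burn-address-C"]
current = active_burn_address(250, rotation, n_blocks=100)
```

A scheme like this is trivially predictable by anyone who has the list, which matches the point made earlier that the published addresses are about projecting intent rather than secrecy.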

-

-

-

STAMP Protocol CIP by jdogresorg · Pull Request #69 · CounterpartyXCP/cips

-

cips/cip-0026.md at master · jdogresorg/cips

-

I spent some time writing up a "STAMP Protocol" CIP which I just submitted a PR on... please feel free to weigh in with your comments here or on the github pull request. 🙂 -

My algo should be able to distinguish vanity from burn addr.

That said, it may fail at detecting a burn address, but it should never accept a real address as a burn addr. -

How can anything but a human determine a burn address? Isn't the whole point that a pattern is only meaningful to a human? -

id say you can have a fairly large certainty threshold -

It must have a pattern statistically infeasible to brute force.

With my heuristics that means (roughly)

- a consecutive run of 11 of the same character

- a top-10,000 English word of length 12

Threshold can also be made stricter. -
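The two rough thresholds above can be sketched in Python as follows. This is not the algorithm from the linked JS file, only an illustration of the idea: the function names are hypothetical, the word list is supplied by the caller (the top-10,000 list is not reproduced here), and matching words case-insensitively is an assumption.

```python
from itertools import groupby


def longest_run(s: str) -> int:
    """Length of the longest run of one repeated character in s."""
    return max((len(list(group)) for _, group in groupby(s)), default=0)


def contains_long_word(addr: str, words, min_len: int = 12) -> bool:
    """True if any supplied word of at least min_len letters appears in addr."""
    lowered = addr.lower()
    return any(len(w) >= min_len and w.lower() in lowered for w in words)


def looks_like_burn_address(addr: str, words=(), min_run: int = 11,
                            min_word_len: int = 12) -> bool:
    """Conservative check: flag only patterns too long to grind as a vanity."""
    return (longest_run(addr) >= min_run
            or contains_long_word(addr, words, min_word_len))
```

Raising `min_run` or `min_word_len` makes the check stricter. As noted in the chat, a check like this can miss addresses that a human would recognise as burns, since it only knows the patterns it is given.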

-

My point exactly -

A human would see that as a burn address but a machine would miss it -

-

either way, dont you anticipate the need to rotate if expecting counter-measures?

it wont be humans detecting but resistant code. -

I think it’s probably enough to project intent. We’ll deal with escalation if it comes to that. -

-

But there is also a cost on our end when adding more complexity so I’d rather project intent until we need to outsmart the cat -

so you want burn addresses so the outputs cant be spent and also for node operators to not be able to filter them out? -

Yes -

how is it that YOU would know but THEY wouldnt? -

unless you kept the list secret -

The addresses are published. It’s not that they wouldn’t know but that it might be too much of a pain to bother -

like a secret book -

secret book of burns -

you’ll have to come up with a better name obvi -

The point is that it’s not “one and done” so why even bother pruning any if we can just add more. We’re planning on people’s natural inertia -

Plus it’s a bit of memetic warfare -

-

Is it even a “node” if it prunes spendable outputs? I’d argue it isn’t -

-

What’s a spendable output? -

An output that can be spent, I’d argue that even a burn address can be spent if you have a few billion years to grind away at it -

-

-

the thing is if you can spend a burn address output then ECC is broken -

so it really isn’t spendable -

- 20 April 2023 (112 messages)

-

It’s unspendable enough that it would be reasonable for nodes to have the option to prune it -

A few steps still need to be taken: be aware of Bitcoin Stamps; be motivated enough to do something beyond angry tweets; investigate it enough to find the GitHub repo containing the burn addresses; be technically competent enough to prune them; retain enough interest in this whole endeavour to stay on top of future burn addresses released.

Sure, there could be one really motivated person who also has the competence to create a Bitcoin Core "blacklist" patch and distribute it broadly.

But the point I'm trying to make is that none of this is as trivial as it seems. All of the above requires some amount of work vs doing nothing. -

obviously do nothing is the easiest and its only an issue if its an issue, but actually building a -pruneburns flag is pretty straightforward, i could see bitcoin knots implementing -

well yes Luke will lol -

Do we know how many knots nodes there are -

we should pay luke to build it -

honestly, if stamps gets to the point where knots has a blacklist, I would consider that a huge success -

Not pruning is almost worse, it's indifference. -

i think the inevitable outcome is tx accumulators, something like utreexo -

if stamps gets pruned in 5 years I am okay with that outcome -

because eventually “illegal” data will be added to the chain so nodes need a way to validate and forget -

its already there. that would be a hard sell in my view. but could happen I guess -

utxo accumulators get us closer to the promise of spv -